VXLAN with static ingress replication and multicast control plane

This is the first part of a series covering VXLAN on NEXUS devices and the various control-plane approaches it can use.

In this first part, the unicast (static ingress replication) and multicast control planes are discussed; in the next post, we'll discuss VXLAN using MP-BGP. Each of these approaches has advantages and disadvantages.

The purpose of this series is to show how you can configure each method and how the traffic is forwarded.

VXLAN Tunnel Endpoint (VTEP): the end of a VXLAN segment that performs encapsulation and decapsulation.

VXLAN Network Identifier (VNI): the 24-bit identifier of a VXLAN segment.

Network Virtualization Edge (NVE): the overlay interface used to define VTEPs.

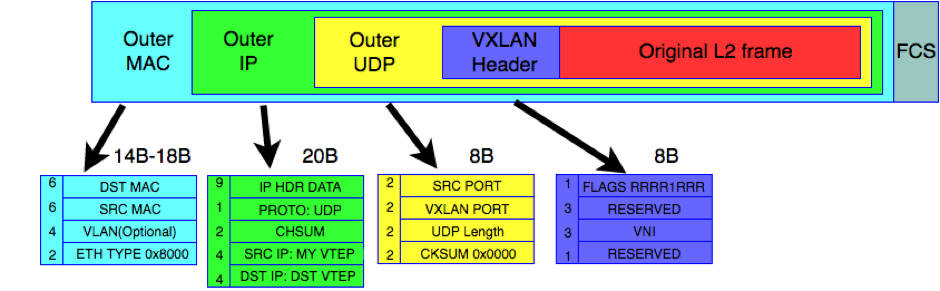

These are the fields of an Ethernet frame carrying a VXLAN-encapsulated frame:

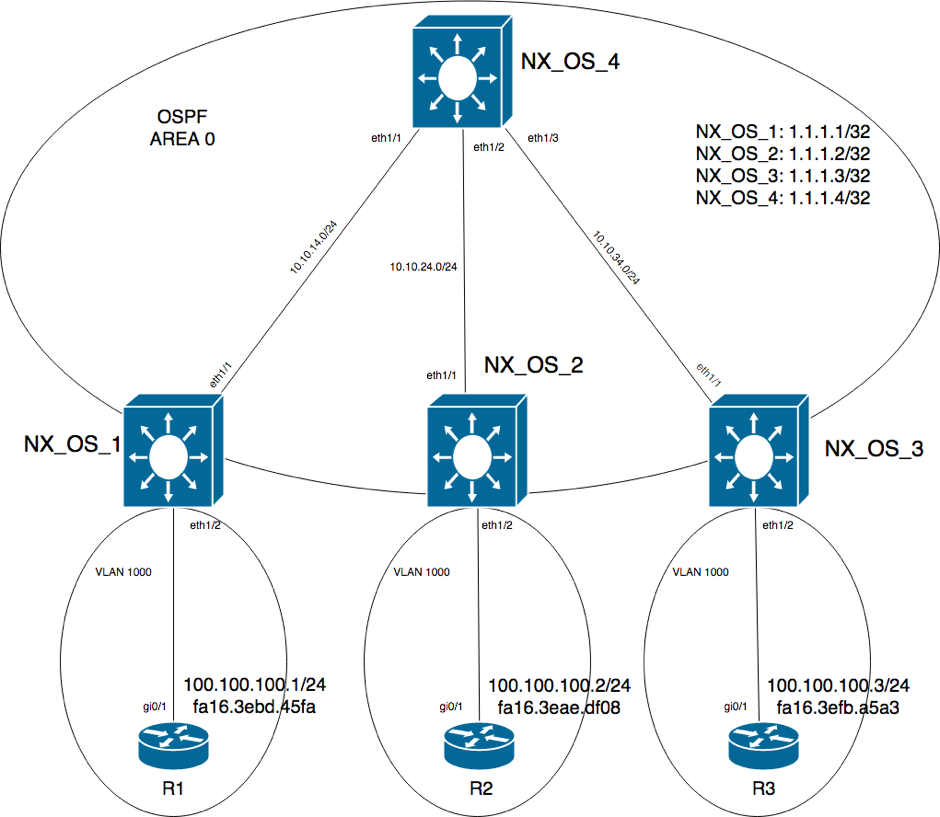

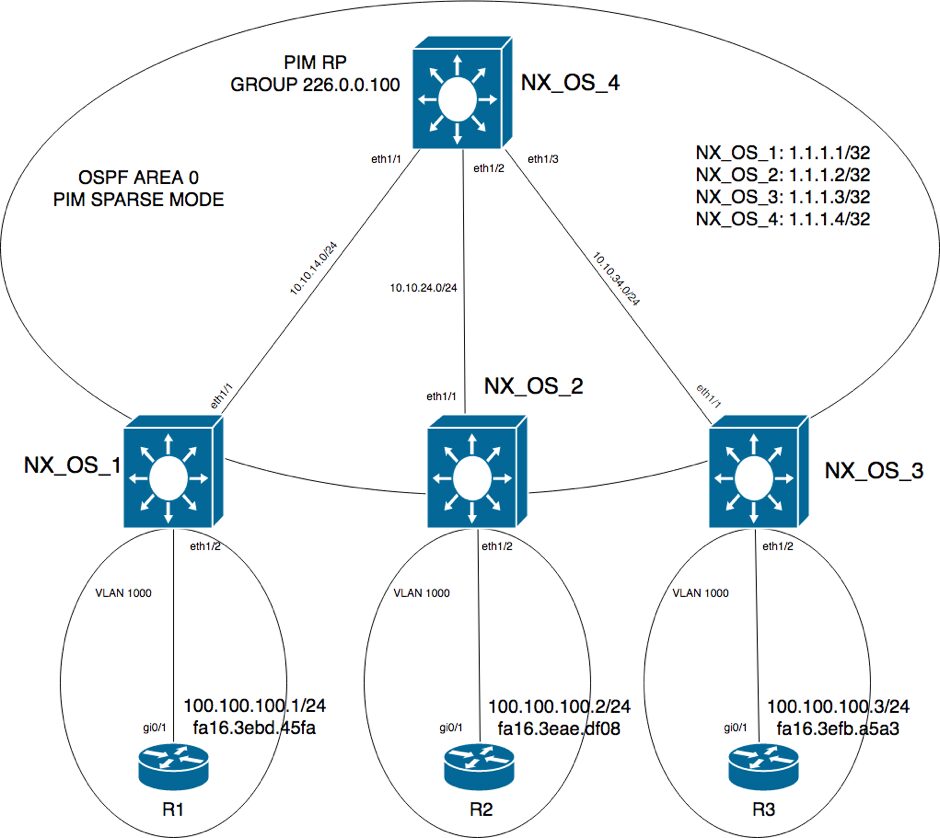

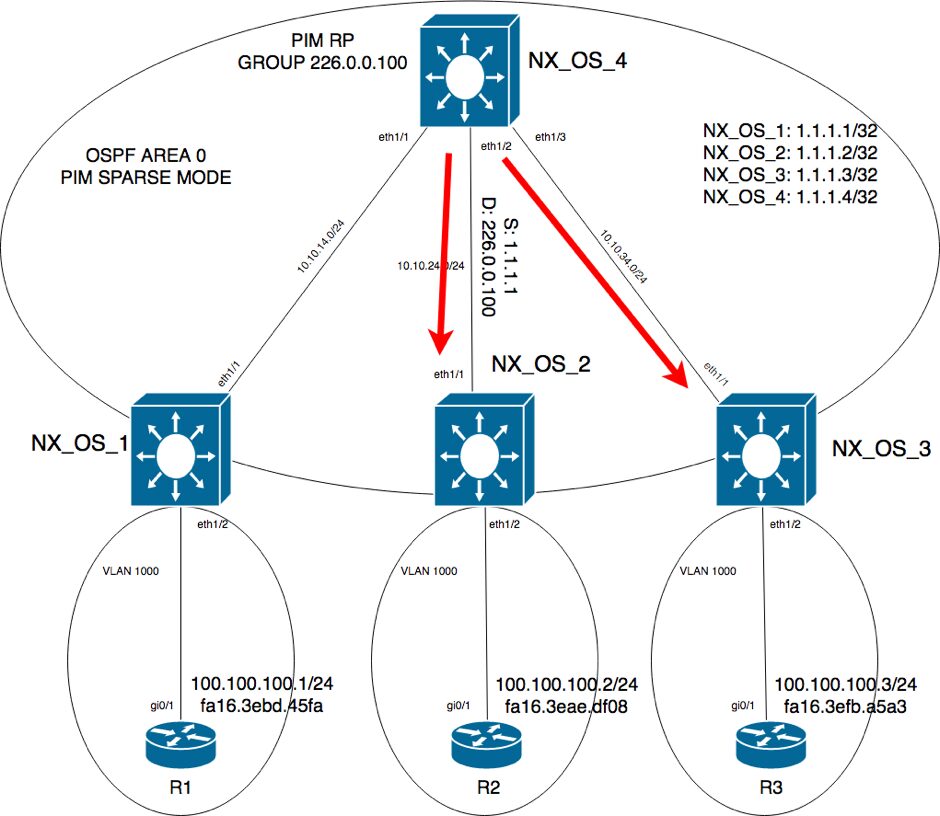
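As a rough illustration of the header fields above, the following Python sketch packs the 8-byte VXLAN header using the RFC 7348 layout (an 8-bit flags field with only the I bit set, 24 reserved bits, the 24-bit VNI, 8 reserved bits) and adds up the encapsulation overhead. It assumes an untagged outer Ethernet frame and an outer IPv4 header without options; the function and constant names are illustrative only.

```python
import struct

VXLAN_UDP_PORT = 4789      # IANA-assigned VXLAN UDP destination port
VXLAN_FLAG_I = 0x08        # "I" flag: the VNI field is valid
MAX_VNI = 2**24 - 1        # the VNI is 24 bits wide


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a given VNI (RFC 7348 layout)."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags (8 bits) followed by 24 reserved bits.
    # Second 32-bit word: VNI (24 bits) followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_I << 24, vni << 8)


# Headers added in front of the original frame (assumption: no outer
# 802.1Q tag and no IPv4 options): Ethernet + IPv4 + UDP + VXLAN.
OUTER_ETHERNET = 14
OUTER_IPV4 = 20
OUTER_UDP = 8
VXLAN_HEADER = len(pack_vxlan_header(0))
OVERHEAD = OUTER_ETHERNET + OUTER_IPV4 + OUTER_UDP + VXLAN_HEADER

if __name__ == "__main__":
    print(pack_vxlan_header(10100).hex())  # VNI used in this lab
    print("overhead:", OVERHEAD, "bytes")
```

The 50 bytes of overhead are why the underlay links need an MTU larger than the hosts' MTU; the underlay interfaces in this lab use `mtu 9216`.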

The first part of this article covers simple VXLAN, using this topology:

The NEXUS devices all run an IGP (OSPF) for loopback reachability, and all traffic between the edge NEXUS devices must go through NX_OS_4.

These are the OSPF routes on NX_OS_4; similar output is found on all the other devices.

NX_OS_4# show ip route ospf-1
IP Route Table for VRF "default"
'*' denotes best ucast next-hop
'**' denotes best mcast next-hop
'[x/y]' denotes [preference/metric]
'%' in via output denotes VRF

1.1.1.1/32, ubest/mbest: 1/0
    *via 10.10.14.1, Eth1/1, [110/41], 00:07:04, ospf-1, intra
1.1.1.2/32, ubest/mbest: 1/0
    *via 10.10.24.2, Eth1/2, [110/41], 00:10:01, ospf-1, intra
1.1.1.3/32, ubest/mbest: 1/0
    *via 10.10.34.3, Eth1/3, [110/41], 00:09:55, ospf-1, intra
NX_OS_4#

R1, R2 and R3 are all in the same VLAN, VLAN 100.

NX_OS_1# show vlan id 100

VLAN Name                             Status    Ports
---- -------------------------------- --------- -------------------------------
100  VLAN0100                         active    Eth1/2

VLAN Type  Vlan-mode
---- ----- ----------
100  enet  CE

Remote SPAN VLAN
----------------
Disabled

Primary  Secondary  Type             Ports
-------  ---------  ---------------  -------------------------------------------

NX_OS_1#

So far, everything is as expected. To enable VXLAN, several things are required.

First, enable the VXLAN and overlay features:

NX_OS_1# show running-config | i feature
feature ospf
feature vn-segment-vlan-based
feature nv overlay
NX_OS_1#

Next, configure the vn-segment ID under the VLAN:

NX_OS_1# show running-config vlan

!Command: show running-config vlan
!Time: Tue Dec 12 14:35:17 2017

version 7.0(3)I6(1)
vlan 1,100
vlan 100
  vn-segment 10100

NX_OS_1#

Finally, create the overlay interface and specify the ingress replication type along with the peers.

This is for NX_OS_1:

NX_OS_1# show running-config nv overlay

!Command: show running-config nv overlay
!Time: Tue Dec 12 14:33:00 2017

version 7.0(3)I6(1)
feature nv overlay

interface nve1
  no shutdown
  source-interface loopback0
  member vni 10100
    ingress-replication protocol static
      peer-ip 1.1.1.2
      peer-ip 1.1.1.3

NX_OS_1#
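With static ingress replication there is no multicast tree in the underlay, so a broadcast, unknown-unicast or multicast (BUM) frame such as R1's ARP Request is copied once per configured `peer-ip` and each copy is sent as a separate unicast VXLAN packet. A minimal Python model of that head-end replication, using the addresses from NX_OS_1's configuration; the class and function names are hypothetical and do not describe NX-OS internals:

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class OuterPacket:
    src_ip: str  # local VTEP address (source-interface loopback0)
    dst_ip: str  # one remote VTEP per copy
    vni: int


def replicate_bum(local_vtep: str, vni: int, peers: List[str]) -> List[OuterPacket]:
    """Head-end replication: one unicast copy of a BUM frame per static peer."""
    return [OuterPacket(local_vtep, peer, vni) for peer in peers if peer != local_vtep]


if __name__ == "__main__":
    # NX_OS_1: member vni 10100 / ingress-replication protocol static
    for pkt in replicate_bum("1.1.1.1", 10100, ["1.1.1.2", "1.1.1.3"]):
        print(pkt)
```

The sending VTEP emits one copy per peer, so its replication load grows with the number of configured peers.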

An almost identical configuration is applied on NX_OS_2 and NX_OS_3; only the peer IP addresses differ.
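Because every VTEP must list every other VTEP, the peer lists on NX_OS_1, NX_OS_2 and NX_OS_3 form a full mesh. The hypothetical helper below derives each device's overlay block from the list of loopback addresses, reusing only the commands shown in NX_OS_1's configuration; it is an illustrative sketch, not a Cisco tool:

```python
from typing import Dict, List

VTEPS = {"NX_OS_1": "1.1.1.1", "NX_OS_2": "1.1.1.2", "NX_OS_3": "1.1.1.3"}


def static_ir_config(device: str, vni: int, vteps: Dict[str, str]) -> List[str]:
    """Return the nve1 block for one device: every other loopback becomes a peer."""
    local_ip = vteps[device]
    lines = [
        "interface nve1",
        "  no shutdown",
        "  source-interface loopback0",
        f"  member vni {vni}",
        "    ingress-replication protocol static",
    ]
    # Full mesh: list every VTEP except this device itself.
    lines += [f"      peer-ip {ip}" for ip in vteps.values() if ip != local_ip]
    return lines


if __name__ == "__main__":
    for name in VTEPS:
        print(f"! {name}")
        print("\n".join(static_ir_config(name, 10100, VTEPS)))
```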

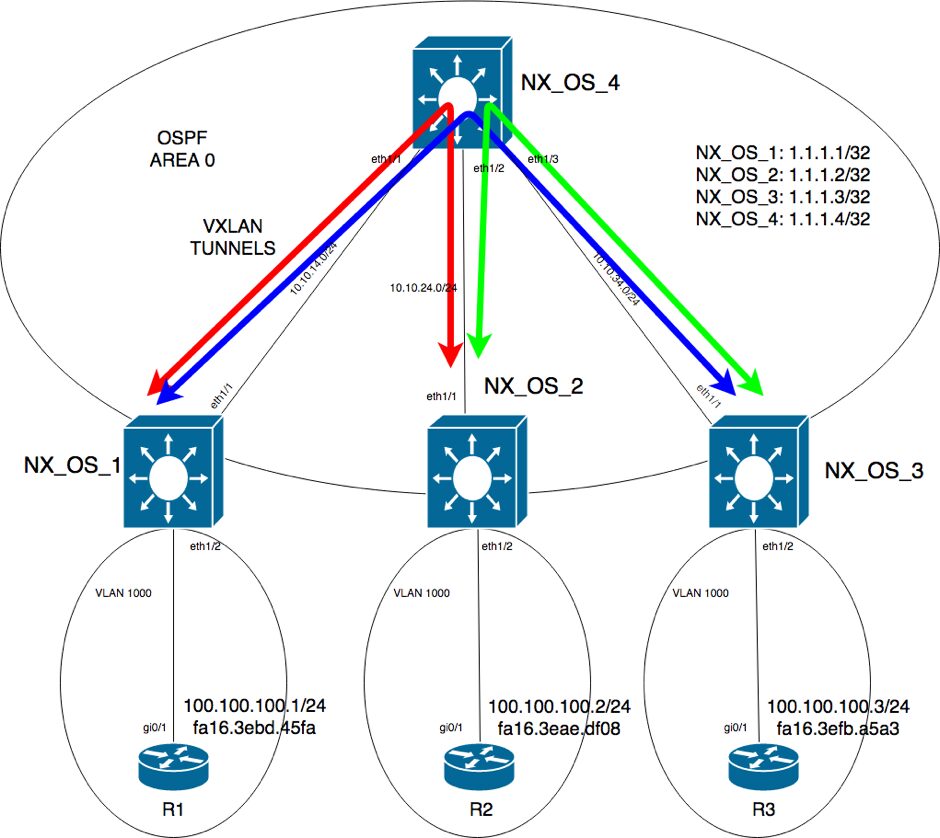

Once this configuration is applied, each NEXUS device builds a tunnel to each of the other two:

This is the overlay interface:

NX_OS_1# show nve interface
Interface: nve1, State: Up, encapsulation: VXLAN
 VPC Capability: VPC-VIP-Only [not-notified]
 Local Router MAC: 5e00.0000.0007
 Host Learning Mode: Data-Plane
 Source-Interface: loopback0 (primary: 1.1.1.1, secondary: 0.0.0.0)

NX_OS_1# show interface nve1
nve1 is up
admin state is up, Hardware: NVE
  MTU 9216 bytes
  Encapsulation VXLAN
  Auto-mdix is turned off
  RX
    ucast: 0 pkts, 0 bytes - mcast: 0 pkts, 0 bytes
  TX
    ucast: 0 pkts, 0 bytes - mcast: 0 pkts, 0 bytes

NX_OS_1#

You can also check the VXLAN network identifier along with the peer status:

NX_OS_1# show nve vni
Codes: CP - Control Plane        DP - Data Plane
       UC - Unconfigured         SA - Suppress ARP

Interface VNI      Multicast-group   State Mode Type [BD/VRF]      Flags
--------- -------- ----------------- ----- ---- ------------------ -----
nve1      10100    UnicastStatic     Up    DP   L2 [100]

NX_OS_1# show nve peers detail | no-more
Details of nve Peers:
----------------------------------------
Peer-Ip: 1.1.1.2
    NVE Interface : nve1
    Peer State : Up
    Peer Uptime : 00:04:48
    Router-Mac : n/a
    Peer First VNI : 10100
    Time since Create : 00:04:48
    Configured VNIs : 10100
    Provision State : add-complete
    Route-Update : Yes
    Peer Flags : None
    Learnt CP VNIs : 10100
    Peer-ifindex-resp : Yes
----------------------------------------
Peer-Ip: 1.1.1.3
    NVE Interface : nve1
    Peer State : Up
    Peer Uptime : 00:04:48
    Router-Mac : n/a
    Peer First VNI : 10100
    Time since Create : 00:04:48
    Configured VNIs : 10100
    Provision State : add-complete
    Route-Update : Yes
    Peer Flags : None
    Learnt CP VNIs : 10100
    Peer-ifindex-resp : Yes
----------------------------------------

NX_OS_1#

Everything looks fine, so a ping from R1 to R2 and R3 should be successful:

R1#ping 100.100.100.2
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 100.100.100.2, timeout is 2 seconds:
.!!!!
Success rate is 80 percent (4/5), round-trip min/avg/max = 17/18/19 ms
R1#ping 100.100.100.3
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 100.100.100.3, timeout is 2 seconds:
.!!!!
Success rate is 80 percent (4/5), round-trip min/avg/max = 18/18/19 ms
R1#show ip arp
Protocol  Address          Age (min)  Hardware Addr   Type   Interface
Internet  100.100.100.1           -   fa16.3ebd.45fa  ARPA   GigabitEthernet0/1
Internet  100.100.100.2           0   fa16.3eae.df08  ARPA   GigabitEthernet0/1
Internet  100.100.100.3           0   fa16.3efb.a5a3  ARPA   GigabitEthernet0/1
R1#

As you can see, R1 gets ARP entries for R2 and R3 as if all three routers were connected to the same local VLAN. The first echo of each ping is lost while ARP resolution completes.

The MAC address table on NX_OS_1 shows which MAC was learned via the direct connection (R1) and which ones were learned over the overlay interface, and from which peer:

NX_OS_1# show system internal l2fwder mac
Legend:
        * - primary entry, G - Gateway MAC, (R) - Routed MAC, O - Overlay MAC
        age - seconds since last seen,+ - primary entry using vPC Peer-Link,
        (T) - True, (F) - False, C - ControlPlane MAC
   VLAN     MAC Address      Type      age     Secure NTFY Ports
---------+-----------------+--------+---------+------+----+------------------
*   100    fa16.3efb.a5a3   dynamic   00:03:48      F    F  (0x47000002) nve-peer2 1.1.1.3
*   100    fa16.3eae.df08   dynamic   00:03:52      F    F  (0x47000001) nve-peer1 1.1.1.2
*   100    fa16.3ebd.45fa   dynamic   00:05:24      F    F  Eth1/2
NX_OS_1#

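Observe that the MAC type is dynamic: the remote MAC addresses are learned in the data plane, which matches the "Host Learning Mode: Data-Plane" shown by show nve interface.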
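Data-plane learning means the VTEP records the inner source MAC of each frame against the port it arrived on or, for decapsulated VXLAN traffic, against the outer source IP of the remote VTEP. A simplified Python model of that table, populated with the MAC addresses from NX_OS_1's output; the class and method names are hypothetical, and aging is ignored:

```python
from typing import Dict, Optional, Tuple


class MacTable:
    """Toy flood-and-learn MAC table keyed by (VLAN, MAC)."""

    def __init__(self) -> None:
        self.entries: Dict[Tuple[int, str], str] = {}

    def learn_local(self, vlan: int, mac: str, port: str) -> None:
        # Frame received on a physical access port.
        self.entries[(vlan, mac)] = port

    def learn_remote(self, vlan: int, mac: str, outer_src_ip: str) -> None:
        # Frame decapsulated from VXLAN: the outer source IP identifies the peer VTEP.
        self.entries[(vlan, mac)] = f"nve-peer {outer_src_ip}"

    def lookup(self, vlan: int, mac: str) -> Optional[str]:
        # None means unknown unicast, which has to be flooded.
        return self.entries.get((vlan, mac))


if __name__ == "__main__":
    table = MacTable()
    table.learn_local(100, "fa16.3ebd.45fa", "Eth1/2")    # R1
    table.learn_remote(100, "fa16.3eae.df08", "1.1.1.2")  # R2 behind NX_OS_2
    table.learn_remote(100, "fa16.3efb.a5a3", "1.1.1.3")  # R3 behind NX_OS_3
    for (vlan, mac), where in table.entries.items():
        print(vlan, mac, "dynamic", where)
```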

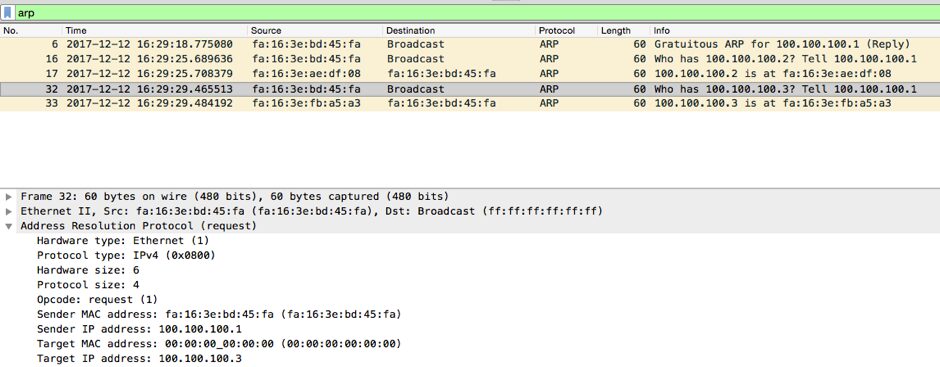

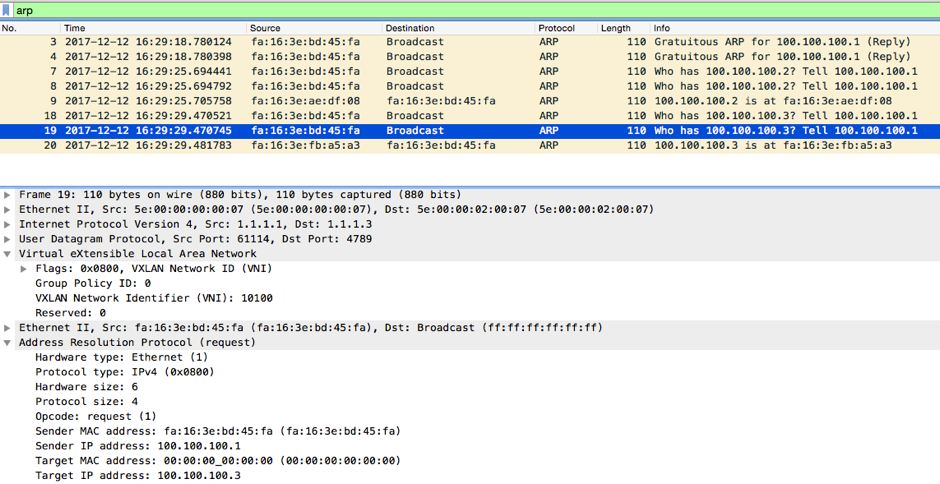

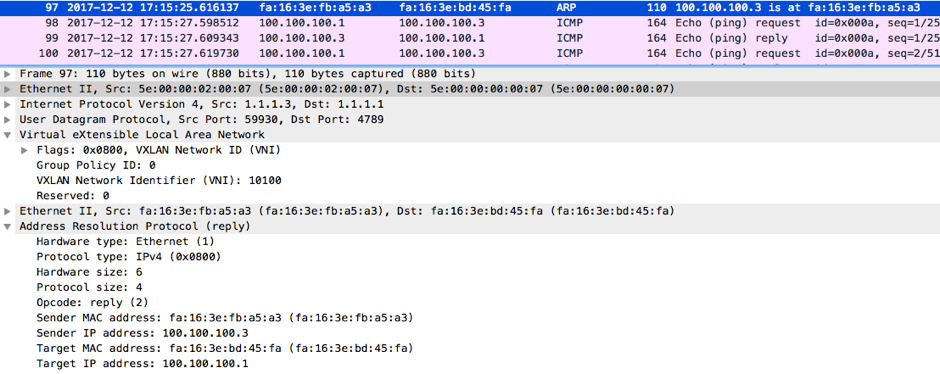

Here is a packet capture taken on NX_OS_1 eth1/2 (the interface towards R1), showing an ARP Request from R1 trying to resolve R3's MAC address:

Next is a packet capture on NX_OS_1 eth1/1 (towards NX_OS_4), showing the same ARP packet encapsulated in VXLAN:

You can clearly see the VXLAN header encapsulating the original frame received from R1 on eth1/2.
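The receiving VTEP reverses this step: the UDP payload starts with the 8-byte VXLAN header, and the VNI it carries selects the local bridge domain (VLAN 100 for VNI 10100 in this lab). A Python sketch of that parsing step under the RFC 7348 layout; the function name and the VNI-to-VLAN dictionary are illustrative assumptions:

```python
import struct
from typing import Tuple

VNI_TO_VLAN = {10100: 100}  # from "vlan 100 / vn-segment 10100"


def parse_vxlan(udp_payload: bytes) -> Tuple[int, bytes]:
    """Return (VNI, inner Ethernet frame) from a VXLAN UDP payload."""
    if len(udp_payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", udp_payload[:8])
    # The I flag (0x08 in the first byte) must be set for the VNI to be valid.
    if not (flags_word >> 24) & 0x08:
        raise ValueError("I flag not set: VNI is not valid")
    # The VNI occupies the top 24 bits of the second word.
    return vni_word >> 8, udp_payload[8:]


if __name__ == "__main__":
    header = bytes.fromhex("0800000000277400")  # VNI 10100
    inner = b"\xff" * 6                         # start of a broadcast ARP frame
    vni, frame = parse_vxlan(header + inner)
    print(vni, VNI_TO_VLAN[vni], frame.hex())
```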

That covers VXLAN using unicast (static ingress replication).

Next, we cover VXLAN with a multicast control plane. From the underlay point of view nothing changes, except that PIM was added, with NX_OS_4 as the RP for the group used by VXLAN:

This is the configuration on NX_OS_1; all the other devices have an identical configuration:

NX_OS_1# show running-config | section pim
feature pim
ip pim rp-address 1.1.1.4 group-list 226.0.0.0/24
ip pim ssm range 232.0.0.0/8
  ip pim sparse-mode
  ip pim sparse-mode
NX_OS_1# show running-config interface lo0

!Command: show running-config interface loopback0
!Time: Tue Dec 12 15:07:04 2017

version 7.0(3)I6(1)

interface loopback0
  ip address 1.1.1.1/32
  ip router ospf 1 area 0.0.0.0
  ip pim sparse-mode

NX_OS_1# show running-config interface e1/1

!Command: show running-config interface Ethernet1/1
!Time: Tue Dec 12 15:07:11 2017

version 7.0(3)I6(1)

interface Ethernet1/1
  no switchport
  mtu 9216
  ip address 10.10.14.1/24
  ip router ospf 1 area 0.0.0.0
  ip pim sparse-mode
  no shutdown

NX_OS_1#
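The `rp-address ... group-list 226.0.0.0/24` and `ssm range 232.0.0.0/8` statements determine how a group is handled: 226.0.0.100 falls inside the RP's range, so it uses the shared tree rooted at NX_OS_4, while groups in 232.0.0.0/8 are treated as source-specific. A small Python check with the standard `ipaddress` module illustrates this; the function is illustrative and only considers the ranges configured in this lab:

```python
import ipaddress

RP_RANGES = {ipaddress.ip_network("226.0.0.0/24"): "1.1.1.4"}  # ip pim rp-address
SSM_RANGE = ipaddress.ip_network("232.0.0.0/8")                 # ip pim ssm range


def classify_group(group: str) -> str:
    """Describe how this lab's PIM configuration would treat a group address."""
    addr = ipaddress.ip_address(group)
    if not addr.is_multicast:
        return "not a multicast address"
    if addr in SSM_RANGE:
        return "SSM (no RP)"
    for network, rp in RP_RANGES.items():
        if addr in network:
            return f"ASM, RP {rp}"
    return "no RP configured in this lab"


if __name__ == "__main__":
    for g in ("226.0.0.100", "232.1.1.1", "239.1.1.1"):
        print(g, "->", classify_group(g))
```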

The VXLAN configuration for multicast is almost identical to the one used for unicast.

The difference is that ingress replication was removed and a multicast group was added:

NX_OS_1# show running-config nv overlay

!Command: show running-config nv overlay
!Time: Tue Dec 12 15:04:42 2017

version 7.0(3)I6(1)
feature nv overlay

interface nve1
  no shutdown
  source-interface loopback0
  member vni 10100
    mcast-group 226.0.0.100

NX_OS_1#
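The group configured here becomes the outer destination IP of flooded traffic, so on the underlay Ethernet links those packets carry the matching IPv4 multicast MAC address: 01:00:5e followed by the low-order 23 bits of the group address (RFC 1112). A short Python sketch of that mapping; the function name is illustrative:

```python
import ipaddress


def multicast_mac(group: str) -> str:
    """Map an IPv4 multicast group to its Ethernet MAC (01:00:5e + low 23 bits)."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not a multicast address")
    low23 = int(addr) & 0x7FFFFF          # keep only the low-order 23 bits
    mac = (0x01005E << 24) | low23        # prepend the 01:00:5e prefix
    return ":".join(f"{(mac >> shift) & 0xFF:02x}" for shift in range(40, -1, -8))


if __name__ == "__main__":
    print(multicast_mac("226.0.0.100"))
```

Because only 23 bits are kept, 32 groups share each MAC address; for example, 226.128.0.100 maps to the same MAC as 226.0.0.100.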

Independently of the overlay interface configuration, the underlying PIM infrastructure must be working. These are the PIM neighbors of NX_OS_4 (the RP):

NX_OS_4# show ip pim neighbor
PIM Neighbor Status for VRF "default"
Neighbor        Interface            Uptime    Expires   DR       Bidir-  BFD
                                                         Priority Capable State
10.10.14.1      Ethernet1/1          00:20:19  00:01:38  1        yes     n/a
10.10.24.2      Ethernet1/2          00:20:15  00:01:31  1        yes     n/a
10.10.34.3      Ethernet1/3          00:20:12  00:01:31  1        yes     n/a
NX_OS_4#

This is the multicast routing table on NX_OS_1:

NX_OS_1# show ip mroute | no-more
IP Multicast Routing Table for VRF "default"

(*, 226.0.0.100/32), uptime: 00:32:34, ip pim nve
  Incoming interface: Ethernet1/1, RPF nbr: 10.10.14.4, uptime: 00:30:52
  Outgoing interface list: (count: 1)
    nve1, uptime: 00:06:29, nve

(1.1.1.1/32, 226.0.0.100/32), uptime: 00:16:48, ip mrib pim nve
  Incoming interface: loopback0, RPF nbr: 1.1.1.1, uptime: 00:16:48
  Outgoing interface list: (count: 1)
    Ethernet1/1, uptime: 00:07:37, pim

(1.1.1.2/32, 226.0.0.100/32), uptime: 00:16:34, ip mrib pim nve
  Incoming interface: Ethernet1/1, RPF nbr: 10.10.14.4, uptime: 00:16:34
  Outgoing interface list: (count: 1)
    nve1, uptime: 00:06:29, nve

(1.1.1.3/32, 226.0.0.100/32), uptime: 00:16:32, ip mrib pim nve
  Incoming interface: Ethernet1/1, RPF nbr: 10.10.14.4, uptime: 00:16:32
  Outgoing interface list: (count: 1)
    nve1, uptime: 00:06:29, nve

(*, 232.0.0.0/8), uptime: 00:31:11, pim ip
  Incoming interface: Null, RPF nbr: 0.0.0.0, uptime: 00:31:11
  Outgoing interface list: (count: 0)

NX_OS_1#

And this is the multicast routing table on the RP. Observe, for instance, that a packet from 1.1.1.1 destined to 226.0.0.100 is forwarded on eth1/2 (NX_OS_2) and eth1/3 (NX_OS_3), but not back on eth1/1. Also, packets from any source towards 226.0.0.100 are forwarded to all three edge NEXUS devices:

NX_OS_4# show ip mroute
IP Multicast Routing Table for VRF "default"

(*, 226.0.0.100/32), uptime: 00:08:15, pim ip
  Incoming interface: loopback0, RPF nbr: 1.1.1.4, uptime: 00:08:15
  Outgoing interface list: (count: 3)
    Ethernet1/2, uptime: 00:06:06, pim
    Ethernet1/1, uptime: 00:06:07, pim
    Ethernet1/3, uptime: 00:08:15, pim

(1.1.1.1/32, 226.0.0.100/32), uptime: 00:08:15, pim mrib ip
  Incoming interface: Ethernet1/1, RPF nbr: 10.10.14.1, uptime: 00:08:15, internal
  Outgoing interface list: (count: 2)
    Ethernet1/2, uptime: 00:06:06, pim
    Ethernet1/3, uptime: 00:08:15, pim

(1.1.1.2/32, 226.0.0.100/32), uptime: 00:08:15, pim mrib ip
  Incoming interface: Ethernet1/2, RPF nbr: 10.10.24.2, uptime: 00:08:15, internal
  Outgoing interface list: (count: 2)
    Ethernet1/1, uptime: 00:06:07, pim
    Ethernet1/3, uptime: 00:08:15, pim

(1.1.1.3/32, 226.0.0.100/32), uptime: 00:08:15, pim ip
  Incoming interface: Ethernet1/3, RPF nbr: 10.10.34.3, uptime: 00:08:15, internal
  Outgoing interface list: (count: 2)
    Ethernet1/2, uptime: 00:06:06, pim
    Ethernet1/1, uptime: 00:06:07, pim

(*, 232.0.0.0/8), uptime: 00:29:07, pim ip
  Incoming interface: Null, RPF nbr: 0.0.0.0, uptime: 00:29:07
  Outgoing interface list: (count: 0)

NX_OS_4#
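The RP's (S,G) entries follow the usual PIM forwarding rule: the outgoing interface list is the set of interfaces with downstream joins for the group, minus the RPF (incoming) interface towards the source, so a VTEP never gets its own flooded traffic back from the RP. A simplified Python model that reproduces NX_OS_4's entries; it deliberately ignores pruning, timers and assert handling, and the names are hypothetical:

```python
from typing import Dict, List

# Interfaces in the (*, 226.0.0.100) outgoing list on NX_OS_4.
JOINED: List[str] = ["Ethernet1/1", "Ethernet1/2", "Ethernet1/3"]
# RPF interface towards each VTEP loopback (from the OSPF routes).
RPF_INTERFACE: Dict[str, str] = {
    "1.1.1.1": "Ethernet1/1",
    "1.1.1.2": "Ethernet1/2",
    "1.1.1.3": "Ethernet1/3",
}


def sg_oil(source: str) -> List[str]:
    """Outgoing interfaces for (source, G): joined interfaces except the incoming one."""
    iif = RPF_INTERFACE[source]
    return [intf for intf in JOINED if intf != iif]


if __name__ == "__main__":
    for src in RPF_INTERFACE:
        print(f"({src}, 226.0.0.100) iif {RPF_INTERFACE[src]} oil {sg_oil(src)}")
```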

This is the VNI, which now shows the multicast group:

NX_OS_1# show nve vni
Codes: CP - Control Plane        DP - Data Plane
       UC - Unconfigured         SA - Suppress ARP

Interface VNI      Multicast-group   State Mode Type [BD/VRF]      Flags
--------- -------- ----------------- ----- ---- ------------------ -----
nve1      10100    226.0.0.100       Up    DP   L2 [100]

NX_OS_1#

A ping from R1 to R2 and R3 is successful:

R1(config-if)#do ping 100.100.100.2
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 100.100.100.2, timeout is 2 seconds:
.!!!!
Success rate is 80 percent (4/5), round-trip min/avg/max = 18/19/21 ms
R1(config-if)#do ping 100.100.100.3
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 100.100.100.3, timeout is 2 seconds:
.!!!!
Success rate is 80 percent (4/5), round-trip min/avg/max = 18/19/21 ms
R1(config-if)#do show ip arp
Protocol  Address          Age (min)  Hardware Addr   Type   Interface
Internet  100.100.100.1           -   fa16.3ebd.45fa  ARPA   GigabitEthernet0/1
Internet  100.100.100.2           3   fa16.3eae.df08  ARPA   GigabitEthernet0/1
Internet  100.100.100.3           0   fa16.3efb.a5a3  ARPA   GigabitEthernet0/1
R1(config-if)#

The MAC address table also looks the same as before:

NX_OS_1# show system internal l2fwder mac
Legend:
        * - primary entry, G - Gateway MAC, (R) - Routed MAC, O - Overlay MAC
        age - seconds since last seen,+ - primary entry using vPC Peer-Link,
        (T) - True, (F) - False, C - ControlPlane MAC
   VLAN     MAC Address      Type      age     Secure NTFY Ports
---------+-----------------+--------+---------+------+----+------------------
*   100    fa16.3efb.a5a3   dynamic   00:02:57      F    F  (0x47000002) nve-peer2 1.1.1.3
*   100    fa16.3eae.df08   dynamic   00:03:21      F    F  (0x47000001) nve-peer1 1.1.1.2
*   100    fa16.3ebd.45fa   dynamic   00:03:31      F    F  Eth1/2
NX_OS_1#

Again, the MAC type is dynamic, as with the unicast control plane.

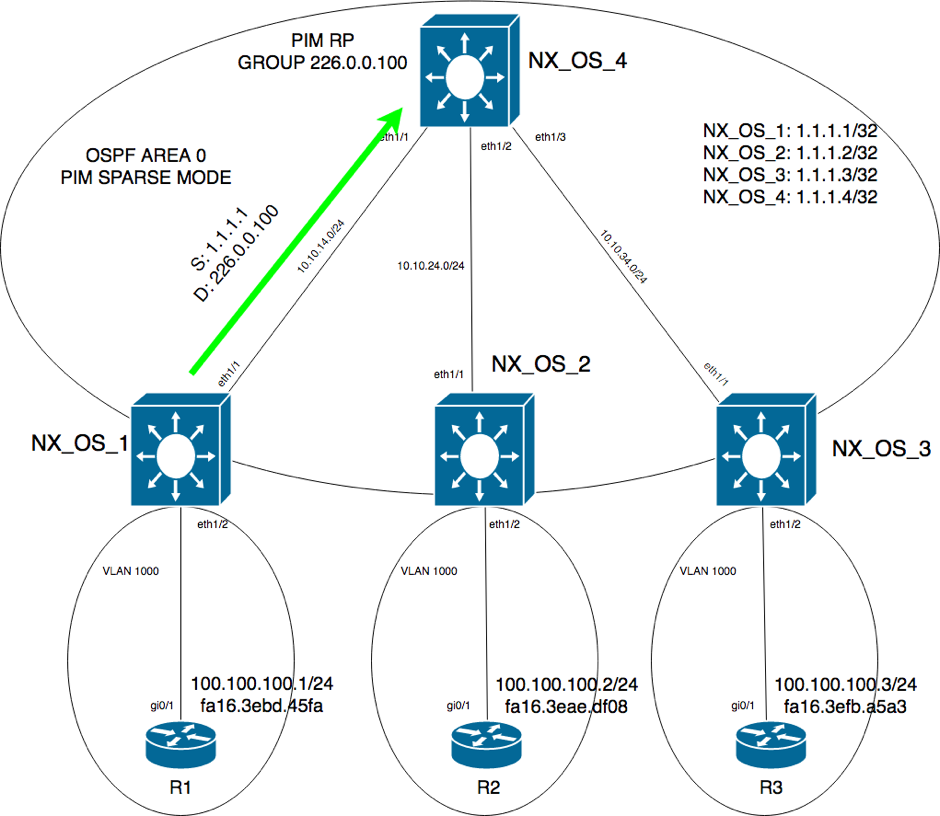

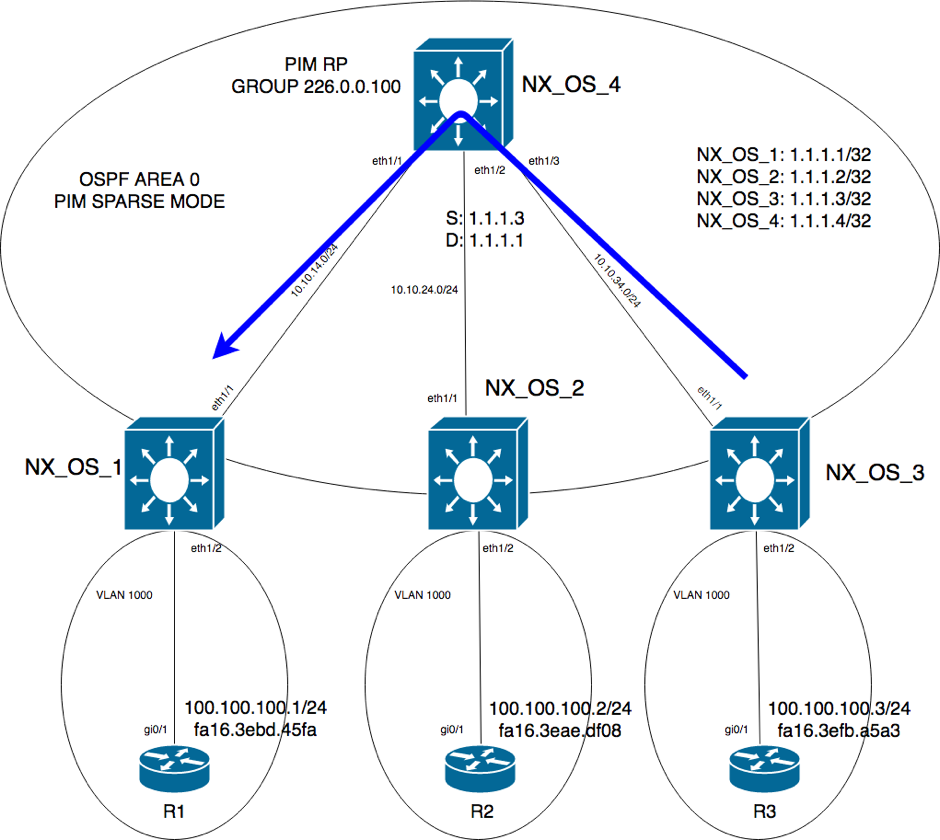

The following is the traffic flow and VTEP discovery for ARP Request/ARP Reply.

The ARP Request is sent by the end host and reaches NX_OS_1.

NX_OS_1 sends the encapsulated ARP Request using its loopback IP address as the source and the multicast group as the destination:

This is a packet capture on NX_OS_1 eth1/1 showing the ARP Request leaving. Notice the source and destination IP addresses of the packet:

After the packet reaches the RP, the RP forwards it on all interfaces on which a PIM Join for group 226.0.0.100 was received, except the interface it arrived on:

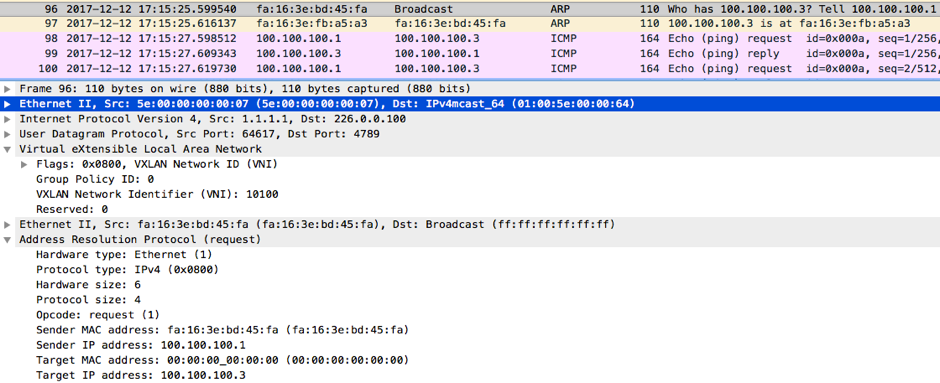

After the packet reaches NX_OS_3, it is decapsulated and sent to R3; at this moment NX_OS_3 learns that R1's MAC address is behind NX_OS_1. R3 sends an ARP Reply to NX_OS_3, which encapsulates it in a unicast packet and sends it directly to NX_OS_1:

This is a packet capture on NX_OS_1 showing the ARP Reply coming from NX_OS_3:

And that is pretty much how VXLAN using multicast is implemented and how data forwarding happens.
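The ARP exchange above comes down to one decision on each VTEP: a broadcast or unknown destination MAC is sent to the VNI's multicast group, while a MAC already learned from a remote VTEP is sent in a unicast packet to that VTEP's IP. A Python sketch of that decision on NX_OS_3, assuming a plain dictionary as the MAC table; the names are hypothetical and the model ignores aging and locally switched traffic:

```python
from typing import Dict, Tuple

BROADCAST = "ffff.ffff.ffff"
LOCAL_VTEP = "1.1.1.3"       # NX_OS_3 loopback0
MCAST_GROUP = "226.0.0.100"  # member vni 10100 / mcast-group


def receive_from_overlay(table: Dict[str, str], inner_src_mac: str, outer_src_ip: str) -> None:
    """Flood-and-learn: remember which remote VTEP the inner source MAC sits behind."""
    table[inner_src_mac] = outer_src_ip


def outer_destination(table: Dict[str, str], inner_dst_mac: str) -> Tuple[str, str]:
    """Pick the outer (source IP, destination IP) for a frame entering the VNI."""
    if inner_dst_mac == BROADCAST or inner_dst_mac not in table:
        return LOCAL_VTEP, MCAST_GROUP       # flood over the multicast tree
    return LOCAL_VTEP, table[inner_dst_mac]  # known MAC: unicast to the remote VTEP


if __name__ == "__main__":
    mac_table: Dict[str, str] = {}
    # R1's ARP Request arrives from NX_OS_1 via 226.0.0.100.
    receive_from_overlay(mac_table, "fa16.3ebd.45fa", "1.1.1.1")
    # R3's ARP Reply is addressed to R1's MAC, which is now known.
    print(outer_destination(mac_table, "fa16.3ebd.45fa"))
    print(outer_destination(mac_table, BROADCAST))
```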

To sum up, these are some of the advantages and disadvantages of each approach:

- Advantages
  - Unicast control-plane:
    - Controlled deployment of VTEPs
    - Easier troubleshooting
  - Multicast control-plane:
    - Reduced operational overhead
    - Scalability
    - Simplicity
- Disadvantages
  - Unicast control-plane:
    - Increased operational burden
    - Prone to configuration errors
    - Each peer must be configured on every VTEP
  - Multicast control-plane:
    - Each VNI uses a multicast group
    - Possibly increased complexity due to PIM
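The point that each peer must be configured on every VTEP can be made concrete with a rough count of overlay configuration lines for V VTEPs and N VNIs, assuming (as in this lab) that every VNI exists on every VTEP: static ingress replication needs V(V-1) `peer-ip` statements per VNI, while the multicast approach needs one `mcast-group` statement per VNI on each VTEP. A Python sketch of that arithmetic; the function names are illustrative:

```python
def static_ir_peer_lines(vteps: int, vnis: int) -> int:
    """peer-ip statements: each VTEP lists every other VTEP under every VNI."""
    return vteps * (vteps - 1) * vnis


def mcast_group_lines(vteps: int, vnis: int) -> int:
    """mcast-group statements: one per VNI on each VTEP."""
    return vteps * vnis


if __name__ == "__main__":
    for v, n in ((3, 1), (10, 1), (10, 50)):
        print(f"{v} VTEPs, {n} VNIs: static IR {static_ir_peer_lines(v, n)}, "
              f"multicast {mcast_group_lines(v, n)}")
```

The quadratic term is why, in the static model, adding one VTEP means touching the configuration of every existing VTEP.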


Thank you to Paris Arau for his contributions to this article.