Seven best practices to keep your NTP resilient

When NTP, which synchronizes network clocks, gets off-kilter, DNS and other network disruptions follow. Keep your NTP in shape with BlueCat’s expert tips.

What is the worst thing you can imagine happening to your network when NTP is off-kilter?

BlueCat recently asked a small group of IT professionals, who came up with a few troubling scenarios: Your security logs are suddenly unreliable. DNS zone transfers can’t occur. Active Directory domain controller services shut down. You suddenly lose DNSSEC.

When NTP is malfunctioning and system clocks are out of sync, everything stops.

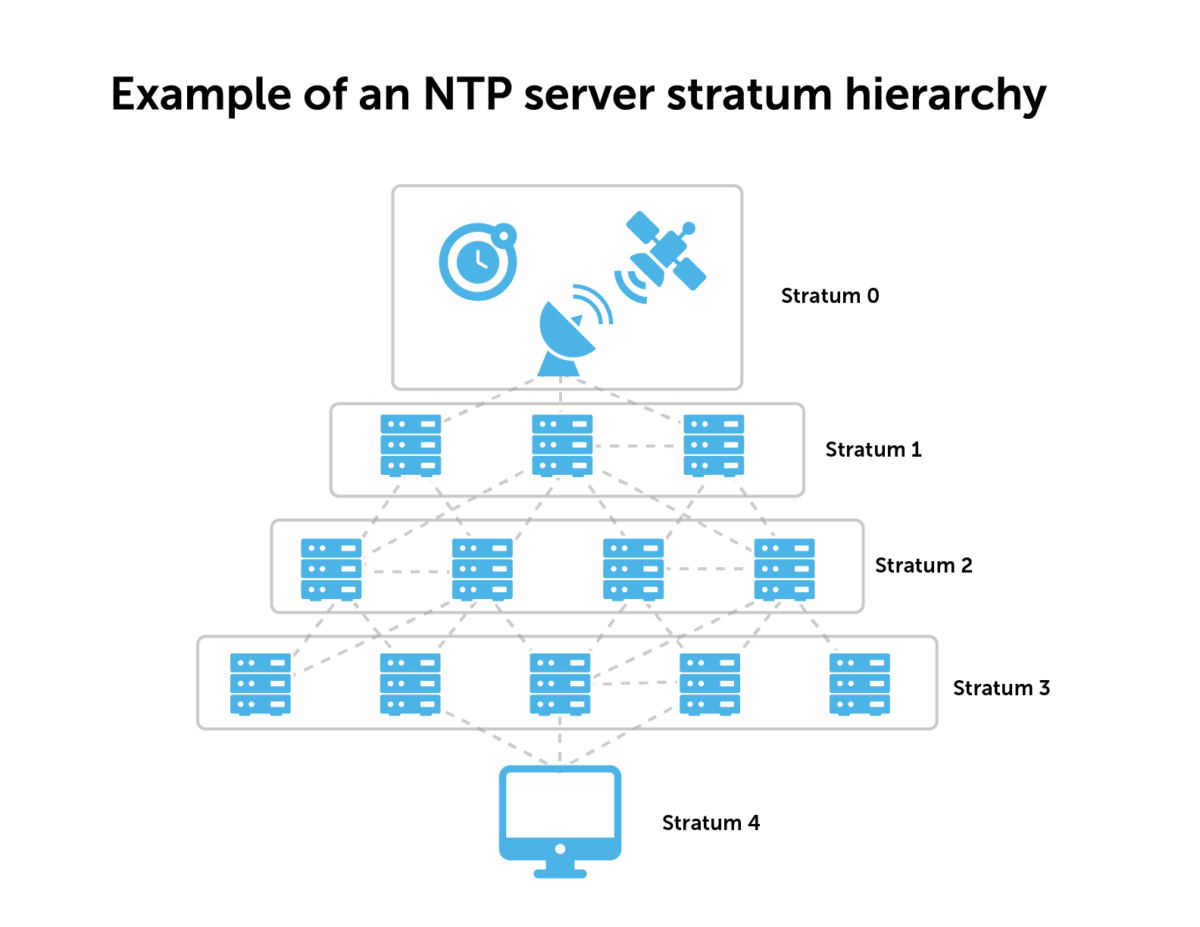

Network Time Protocol (NTP) is the protocol for synchronizing clocks between computers on a network. Designed by David L. Mills, NTP synchronizes computer systems to within a few milliseconds of Coordinated Universal Time (UTC). It uses a hierarchical system of time sources, with each level called a stratum. High-precision time devices like atomic clocks, GPS, or other radio clocks are stratum 0.

NTP may be as old as Nintendo (happy 35th anniversary, Super Mario Brothers!), but it’s still just as crucial to a functioning network. But for all its importance, NTP is a detail easily overlooked or misconfigured.

A small group of IT professionals who are part of our open DDI and DNS expert conversations recently discussed these ideas. All are welcome to join Network VIP on Slack. Below, we’ll explore seven best practices to keep your NTP resilient and avoid network disruptions.

Seven best practices for NTP resiliency

1. Have at least four NTP servers

Each network system should have at least four NTP servers, and preferably more. How many, exactly, depends on your particular network infrastructure. There is no one correct answer.

Just one is never a good idea—if it fails, you’re up the creek.

But even just two can create what is termed the two-clock problem. If one NTP server says 11:00 and one says 12:00, which is right? It’s rather difficult to tell. If you add a third, NTP can work out which is probably the right one.

However, if you’ve got three and then one of those fails or goes offline, you’re down to two again and back to the two-clock problem. Going to at least four NTP servers avoids this dilemma.
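The reasoning above can be illustrated with a small Python sketch. It is not the selection algorithm NTP itself runs, which is considerably more involved; it is a simplified majority vote. The function name `agreeing_majority`, the source names, and the 50-millisecond tolerance are all assumptions made for this example.

```python
from typing import Dict, List, Optional


def agreeing_majority(
    offsets: Dict[str, float], tolerance: float = 0.05
) -> Optional[List[str]]:
    """Return the largest group of sources whose reported offsets (seconds)
    lie within `tolerance` of each other, but only if that group is a
    strict majority of all sources. Otherwise return None.

    Simplified illustration only; not NTP's real selection algorithm.
    """
    # Sort source names by the offset each one reports.
    names = sorted(offsets, key=lambda name: offsets[name])
    best: List[str] = []
    for i, low in enumerate(names):
        # Collect every source within `tolerance` above this starting point.
        group = [n for n in names[i:] if offsets[n] - offsets[low] <= tolerance]
        if len(group) > len(best):
            best = group
    # A strict majority is needed to outvote the sources that disagree.
    if len(best) * 2 > len(names):
        return best
    return None


# Two sources an hour apart: no way to tell which one is right.
print(agreeing_majority({"ntp1": 0.0, "ntp2": 3600.0}))
# Three sources: the two that agree outvote the outlier.
print(agreeing_majority({"ntp1": 0.0, "ntp2": 0.01, "ntp3": 3600.0}))
```

With four sources configured, losing any one of them still leaves three, so a vote like this can still be decided.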

But even four might not be the right number. Say you have four GPS receivers that you’ve configured on all your DNS and DHCP servers and your IP address management tool (for BlueCat users, this would be your BDDSes and Address Manager). Two of them are in Europe and two of them are in North America.

What happens if your link between Europe and North America goes down? Then you have the two-clock problem in two places.

2. Consider your network layout

How to implement NTP is not a simple decision. You must consider the entirety of your network infrastructure to find that magic number and configuration for true NTP resilience.

You must be careful not to make your NTP structure too deep, either. After stratum 1, you might have a distribution layer at stratum 2, and then another distribution layer at stratum 3 before getting to end clients. But each layer gets further from the clock source and adds more uncertainty to the time.

That’s not to say three strata is fine and four is bad or four is fine and five is bad. It all depends on your network infrastructure.

3. Ensure stratum architecture is consistent

Whatever stratum hierarchy you have architected, be sure it is consistent across your enterprise. For example, if you have a BDDS at stratum 3, ensure that all your BDDSes are at stratum 3 at all locations. Machines performing the same role should not reside in different strata.
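One way to audit this is to group machines by role and flag any role that spans more than one stratum. The Python sketch below assumes you can already export an inventory of hostnames with a role and a stratum for each; the inventory layout, the hostnames, and the function name `mixed_strata` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple


def mixed_strata(inventory: Dict[str, Tuple[str, int]]) -> Dict[str, Set[int]]:
    """Map each role to its set of strata, keeping only roles whose
    machines sit in more than one stratum."""
    strata_by_role: Dict[str, Set[int]] = defaultdict(set)
    for _host, (role, stratum) in inventory.items():
        strata_by_role[role].add(stratum)
    return {role: s for role, s in strata_by_role.items() if len(s) > 1}


# Hypothetical inventory: hostname -> (role, stratum).
inventory = {
    "bdds-toronto-1": ("bdds", 3),
    "bdds-london-1": ("bdds", 3),
    "bdds-tokyo-1": ("bdds", 4),  # inconsistent with the other BDDSes
    "ntp-dist-1": ("distribution", 2),
}
print(mixed_strata(inventory))
```

An empty result means every role sits in a single stratum.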

4. Consider site resilience

What happens if you’ve got a critical factory site that must survive and keep producing even if it’s isolated from the rest of your organization? That site has to be able to cope on its own if it’s totally isolated.

It’s all well and good having everything else redundant on that particular site. But if isolation occurs and it doesn’t have good time sources, things aren’t going to work very well for long.

5. Don’t use the NTP pool exclusively

The NTP pool is essentially an NTP server for everyone. It’s a collection of networked computers that volunteer to provide accurate time via NTP to clients across the world. Machines in the pool are reached through hostnames under the pool.ntp.org domain.

Without a doubt, the NTP pool project and public NTP servers are a great thing. They’re used by hundreds of millions of systems and especially helpful for average internet users.

But in an enterprise environment, you might have a large number of DNS and DHCP servers that need to be synchronized and communicate with hundreds of Active Directory servers. Augment your own time sources with the pool, sure, but you should never have a majority, let alone all, of your NTP sources come from the pool.

These days, enterprises that take this approach might find themselves learning a hard penny-wise, pound-foolish lesson.

In the days when GPS receivers were pricey, it perhaps made more sense to rely on the NTP pool as a way to save money. The NTP pool, while a valuable resource, has no enforceable service-level agreement or contract with your organization. Additionally, should you have an internet outage, you could experience further internal issues with NTP.
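A quick check of a server’s configured sources can show whether the pool has crept into the majority. This Python sketch assumes pool servers can be recognized by hostnames ending in pool.ntp.org and that the configured sources are available as a plain list; the function names and the internal hostnames are hypothetical.

```python
from typing import List


def is_pool_host(hostname: str) -> bool:
    """True if the hostname is pool.ntp.org or a name underneath it."""
    name = hostname.lower().rstrip(".")
    return name == "pool.ntp.org" or name.endswith(".pool.ntp.org")


def pool_share(sources: List[str]) -> float:
    """Fraction of configured NTP sources that come from the public pool."""
    if not sources:
        return 0.0
    return sum(is_pool_host(s) for s in sources) / len(sources)


def pool_is_majority(sources: List[str]) -> bool:
    """True when more than half of the sources are pool servers."""
    return pool_share(sources) > 0.5


# Hypothetical configuration: three internal sources, one pool source.
sources = [
    "gps1.example.internal",
    "gps2.example.internal",
    "gps3.example.internal",
    "0.pool.ntp.org",
]
print(pool_share(sources), pool_is_majority(sources))
```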

6. When it comes to redundancy, latency matters

Each DNS and DHCP server on your network should have similar latency to an uplink. Likewise, the latencies between your strata should all be on a similar scale. That can often be achieved by placing servers in close geographical proximity, but don’t rely on it alone. Geographical proximity can be misleading, depending on your location. (For example, there is a much higher latency from Vancouver to Seattle than there is from Toronto to New Jersey. But the latter pair of locations is nearly quadruple the distance apart.)

It is more critical that all DNS servers are running on the same time than on the right time. If all your DNS servers are off by four hours from UTC, that’s not a problem. But if all are off by four hours except one, that’s a problem. It’s crucial for DNS and DHCP server clocks to be in sync across your enterprise.
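The four-hours example can be expressed as a check on agreement rather than accuracy. The Python sketch below compares each server’s offset with the median of the group instead of with UTC. The server names, the one-second tolerance, and the function name `out_of_step` are assumptions for illustration.

```python
import statistics
from typing import Dict, List


def out_of_step(offsets: Dict[str, float], tolerance: float = 1.0) -> List[str]:
    """Return the servers whose offset (seconds) differs from the group
    median by more than `tolerance`, regardless of how far the group
    as a whole is from UTC."""
    median = statistics.median(offsets.values())
    return sorted(
        host for host, offset in offsets.items() if abs(offset - median) > tolerance
    )


# Everyone is four hours (14,400 seconds) off UTC: consistent, nothing flagged.
print(out_of_step({"dns1": 14400.0, "dns2": 14400.2, "dns3": 14399.9}))
# One server is close to UTC while the rest are four hours off: it is the odd one out.
print(out_of_step({"dns1": 14400.0, "dns2": 14400.2, "dns3": 14399.9, "dns4": 0.1}))
```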

7. Avoid time loops

A time loop occurs when server A gets its time from server B, which gets its time from server C, which in turn requests time from server A again. Don’t create one.
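If you can export which servers each machine uses as time sources, a loop like this can be found with an ordinary cycle search. The Python sketch below runs a depth-first search over a hypothetical mapping of each server to its upstream sources; the data layout and the function name `find_time_loop` are assumptions for illustration.

```python
from typing import Dict, List, Optional


def find_time_loop(upstreams: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return one loop as a list of servers (first server repeated at the
    end), or None if no server ends up depending on itself for time."""
    unvisited, in_progress, done = 0, 1, 2
    state: Dict[str, int] = {}
    path: List[str] = []

    def visit(node: str) -> Optional[List[str]]:
        state[node] = in_progress
        path.append(node)
        for source in upstreams.get(node, []):
            if state.get(source, unvisited) == in_progress:
                # The source is already on the current path: that is a loop.
                return path[path.index(source):] + [source]
            if state.get(source, unvisited) == unvisited:
                found = visit(source)
                if found:
                    return found
        path.pop()
        state[node] = done
        return None

    for node in list(upstreams):
        if state.get(node, unvisited) == unvisited:
            found = visit(node)
            if found:
                return found
    return None


# Server A asks B, B asks C, and C asks A again.
print(find_time_loop({"A": ["B"], "B": ["C"], "C": ["A"]}))
# A healthy chain that ends at a reference clock.
print(find_time_loop({"A": ["B"], "B": ["C"], "C": ["gps"]}))
```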

NTP monitoring and troubleshooting challenges

Monitoring the health of NTP is hard. It isn’t as if your server clock will suddenly be 15 hours, 46 minutes, and 33 seconds off. When NTP starts to fail or you don’t have enough good reference clocks, your clocks will slowly drift. After a period of time, they will cross thresholds and unleash problems that give you a clue about the underlying cause. For example, if a clock drifts more than five minutes out, GSS-TSIG will fail. As one of BlueCat’s customers put it:

My consumer base all the time thinks that the time is the time, and shouldn’t everything automatically already know it, and can’t you just put a monitor on it that says when the time’s wrong? And when you get down into the actual weeds of it… Since NTP as a protocol and as a service is so dependent on your network topology and the traversal of that topology, all of the variances that they put into the math to figure out how to shift the clock, when to shift the clock, and how much to shift the clock, if you really look at that and put your math hat on, doing a monitoring thing for that, that is huge.
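Full NTP health monitoring is a large undertaking, as the quote suggests, but a coarse check against a known threshold can still give early warning of slow drift. The Python sketch below uses the five-minute GSS-TSIG figure mentioned above as its limit. The warning fraction, the drift rate input, and both function names are assumptions for illustration, and the measured offset is assumed to come from whatever tooling you already use.

```python
from typing import Optional

GSS_TSIG_LIMIT_SECONDS = 300.0  # the five-minute figure cited in the article


def drift_status(
    offset_seconds: float,
    limit: float = GSS_TSIG_LIMIT_SECONDS,
    warn_fraction: float = 0.5,
) -> str:
    """Classify a measured clock offset against the failure limit."""
    magnitude = abs(offset_seconds)
    if magnitude > limit:
        return "critical"
    if magnitude >= limit * warn_fraction:
        return "warning"
    return "ok"


def days_until_limit(
    offset_seconds: float,
    drift_seconds_per_day: float,
    limit: float = GSS_TSIG_LIMIT_SECONDS,
) -> Optional[float]:
    """Estimate days until the limit is reached, assuming the clock keeps
    drifting away at a constant rate. Returns None if the rate is not positive."""
    if drift_seconds_per_day <= 0:
        return None
    remaining = max(limit - abs(offset_seconds), 0.0)
    return remaining / drift_seconds_per_day


print(drift_status(10.0), drift_status(-200.0), drift_status(301.0))
print(days_until_limit(100.0, 2.0))
```

A constant drift rate is a strong simplification; real drift varies with temperature, load, and hardware, so treat the estimate as a rough guide only.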

It’s also tough to clean up from NTP-related failures. Again, one of BlueCat’s customers said it best:

Your DNS is torqued, no doubt about it. But everything else stops. Really, the nightmare isn’t what happens when time is screwed up. It’s what is your disaster plan for how do you go about fixing it to get everything back up? That’s the nightmare. Not that you lost it—that’s bad. The nightmare is, how do you recover?